This tutorial is outdated and no longer maintained!

Software installation and model download

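Use either the script installation or the manual installation, not both. Once installation is complete, you can move on to configuring it in the NAStool interface.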

Using the one-click script

- Enter the container

docker exec -it --user nt nas-tools /bin/bash

- Run the script

Pick the one script below that suits you and run it.

Install and download the tiny model

bash <(wget --no-check-certificate -qO- 'https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install') tiny

Install and download the tiny model, using the mirror for mainland China

bash <(wget --no-check-certificate -qO- 'https://ghproxy.com/https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install') tiny cn

Install and download the base model

bash <(wget --no-check-certificate -qO- 'https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install') base

Install and download the base model, using the mirror for mainland China

bash <(wget --no-check-certificate -qO- 'https://ghproxy.com/https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install') base cn

Install and download the small model

bash <(wget --no-check-certificate -qO- 'https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install') small

Install and download the small model, using the mirror for mainland China

bash <(wget --no-check-certificate -qO- 'https://ghproxy.com/https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install') small cn

Install and download the medium model

bash <(wget --no-check-certificate -qO- 'https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install') medium

Install and download the medium model, using the mirror for mainland China

bash <(wget --no-check-certificate -qO- 'https://ghproxy.com/https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install') medium cn

Install and download the large model

bash <(wget --no-check-certificate -qO- 'https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install') large

Install and download the large model, using the mirror for mainland China

bash <(wget --no-check-certificate -qO- 'https://ghproxy.com/https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install') large cn
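All ten commands above download the same install script. They differ only in the arguments passed to it.

- The model name is always the first argument.
- The "mirror" variants also fetch the script through the `ghproxy.com` proxy.
- The "mirror" variants also pass `cn` as a second argument, which presumably tells the script to use mirrors for its own downloads.

The sketch below captures that pattern. It assumes the script accepts exactly these arguments. `whisper_install_url` is a hypothetical helper, not part of NAStool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the install-script URL for a model,
# routed through the ghproxy.com proxy when the second argument is "cn".
whisper_install_url() {
  local model="$1" region="${2:-}"
  local url='https://github.com/NAStool/nas-tools-builder/raw/main/script/AutoSub/whisper.cpp/install'
  # Only the model names used in the commands above are accepted
  case "$model" in
    tiny|base|small|medium|large) ;;
    *) echo "unknown model: $model" >&2; return 1 ;;
  esac
  if [ "$region" = "cn" ]; then
    url="https://ghproxy.com/$url"
  fi
  printf '%s\n' "$url"
}
```

For example, `bash <(wget --no-check-certificate -qO- "$(whisper_install_url base cn)") base cn` should be equivalent to the base-model mirror command above.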

Manual installation

- Enter the container

docker exec -it --user nt nas-tools /bin/bash

Note: all of the following commands are run inside the container.

- Build and install

cd /config

mkdir -p /config/plugins

git clone -b master https://github.com/ggerganov/whisper.cpp.git /config/plugins/whisper.cpp --depth=1

cd /config/plugins/whisper.cpp

sudo apk add --no-cache build-base sdl2-dev alsa-utils

echo "CFLAGS += -D_POSIX_C_SOURCE=199309L" > Makefile.NT

make -f Makefile -f Makefile.NT
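GNU make reads several makefiles in order when it is given more than one `-f` option. Because of this, the one-line `Makefile.NT` created by the `echo` command appends `-D_POSIX_C_SOURCE=199309L` to `CFLAGS` without editing the upstream `Makefile` in the clone.

A quick sanity check after building might look like the sketch below. `check_whisper_build` is a hypothetical helper, and its default directory is the clone path used in this tutorial.

```shell
#!/usr/bin/env bash
# Hypothetical post-build check for the manual steps above.
check_whisper_build() {
  local dir="${1:-/config/plugins/whisper.cpp}"
  # Makefile.NT should contain the extra define passed to make via the second -f
  if ! grep -qF -- '-D_POSIX_C_SOURCE=199309L' "$dir/Makefile.NT" 2>/dev/null; then
    echo "Makefile.NT missing or lacks the _POSIX_C_SOURCE define" >&2
    return 1
  fi
  # make should have produced an executable named main in the repository root
  if [ ! -x "$dir/main" ]; then
    echo "$dir/main not found or not executable; check the make output" >&2
    return 1
  fi
  echo "build looks OK: $dir/main"
}
```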

Build output (if it looks roughly like this, the build succeeded):

I whisper.cpp build info:

I UNAME_S: Linux

I UNAME_P: unknown

I UNAME_M: x86_64

I CFLAGS: -I. -O3 -DNDEBUG -std=c11 -fPIC -D_POSIX_C_SOURCE=199309L -pthread -mavx -mavx2 -mfma -mf16c -msse3

I CXXFLAGS: -I. -I./examples -O3 -DNDEBUG -std=c++11 -fPIC -pthread

I LDFLAGS:

I CC: cc (Alpine 12.2.1_git20220924-r4) 12.2.1 20220924

I CXX: g++ (Alpine 12.2.1_git20220924-r4) 12.2.1 20220924

cc -I. -O3 -DNDEBUG -std=c11 -fPIC -D_POSIX_C_SOURCE=199309L -pthread -mavx -mavx2 -mfma -mf16c -msse3 -c ggml.c -o ggml.o

g++ -I. -I./examples -O3 -DNDEBUG -std=c++11 -fPIC -pthread -c whisper.cpp -o whisper.o

g++ -I. -I./examples -O3 -DNDEBUG -std=c++11 -fPIC -pthread examples/main/main.cpp examples/common.cpp ggml.o whisper.o -o main

./main -h

usage: ./main [options] file0.wav file1.wav ...

options:

-h, --help [default] show this help message and exit

-t N, --threads N [4 ] number of threads to use during computation

-p N, --processors N [1 ] number of processors to use during computation

-ot N, --offset-t N [0 ] time offset in milliseconds

-on N, --offset-n N [0 ] segment index offset

-d N, --duration N [0 ] duration of audio to process in milliseconds

-mc N, --max-context N [-1 ] maximum number of text context tokens to store

-ml N, --max-len N [0 ] maximum segment length in characters

-sow, --split-on-word [false ] split on word rather than on token

-bo N, --best-of N [5 ] number of best candidates to keep

-bs N, --beam-size N [-1 ] beam size for beam search

-wt N, --word-thold N [0.01 ] word timestamp probability threshold

-et N, --entropy-thold N [2.40 ] entropy threshold for decoder fail

-lpt N, --logprob-thold N [-1.00 ] log probability threshold for decoder fail

-su, --speed-up [false ] speed up audio by x2 (reduced accuracy)

-tr, --translate [false ] translate from source language to english

-di, --diarize [false ] stereo audio diarization

-nf, --no-fallback [false ] do not use temperature fallback while decoding

-otxt, --output-txt [false ] output result in a text file

-ovtt, --output-vtt [false ] output result in a vtt file

-osrt, --output-srt [false ] output result in a srt file

-owts, --output-words [false ] output script for generating karaoke video

-fp, --font-path [/System/Library/Fonts/Supplemental/Courier New Bold.ttf] path to a monospace font for karaoke video

-ocsv, --output-csv [false ] output result in a CSV file

-oj, --output-json [false ] output result in a JSON file

-of FNAME, --output-file FNAME [ ] output file path (without file extension)

-ps, --print-special [false ] print special tokens

-pc, --print-colors [false ] print colors

-pp, --print-progress [false ] print progress

-nt, --no-timestamps [true ] do not print timestamps

-l LANG, --language LANG [en ] spoken language ('auto' for auto-detect)

--prompt PROMPT [ ] initial prompt

-m FNAME, --model FNAME [models/ggml-base.en.bin] model path

-f FNAME, --file FNAME [ ] input WAV file path
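You can avoid comparing the build output by eye. First save it, for example with `make -f Makefile -f Makefile.NT 2>&1 | tee build.log`. Then look for two markers visible in the sample above:

- the `I CFLAGS:` line containing the extra define;
- the `usage: ./main` help text printed by the `./main -h` step.

The sketch below assumes the output format shown here; newer whisper.cpp versions print different build output. `build_log_ok` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical check of a saved build log against the markers in the sample output.
build_log_ok() {
  local log="$1"
  # The extra define from Makefile.NT should appear in the reported CFLAGS
  grep -q '^I CFLAGS:.*-D_POSIX_C_SOURCE=199309L' "$log" || return 1
  # The freshly linked binary should have printed its help text
  grep -q '^usage: ./main' "$log" || return 1
}
```

Usage: `build_log_ok build.log && echo "build output looks as expected"`.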

- Download a model

bash ./models/download-ggml-model.sh base.en # base.en is just one option; see the table below

ls models/ggml* # show the model file name and copy the output, you will need it later. Mine looked like this:

models/ggml-base.en.bin

| Model | Disk | Mem | SHA |
|---|---|---|---|
| tiny | 75 MB | ~390 MB | bd577a113a864445d4c299885e0cb97d4ba92b5f |
| tiny.en | 75 MB | ~390 MB | c78c86eb1a8faa21b369bcd33207cc90d64ae9df |
| base | 142 MB | ~500 MB | 465707469ff3a37a2b9b8d8f89f2f99de7299dac |
| base.en | 142 MB | ~500 MB | 137c40403d78fd54d454da0f9bd998f78703390c |
| small | 466 MB | ~1.0 GB | 55356645c2b361a969dfd0ef2c5a50d530afd8d5 |
| small.en | 466 MB | ~1.0 GB | db8a495a91d927739e50b3fc1cc4c6b8f6c2d022 |
| medium | 1.5 GB | ~2.6 GB | fd9727b6e1217c2f614f9b698455c4ffd82463b4 |
| medium.en | 1.5 GB | ~2.6 GB | 8c30f0e44ce9560643ebd10bbe50cd20eafd3723 |
| large-v1 | 2.9 GB | ~4.7 GB | b1caaf735c4cc1429223d5a74f0f4d0b9b59a299 |
| large | 2.9 GB | ~4.7 GB | 0f4c8e34f21cf1a914c59d8b3ce882345ad349d6 |

For details on each model, see the official documentation: https://github.com/ggerganov/whisper.cpp/tree/master/models
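The SHA column in the model table lets you confirm that a download is complete. The 40-hex-digit values look like SHA-1 digests, so a check could use `sha1sum`, as in the sketch below.

A few assumptions apply:

- `expected_sha`, `sha1_matches` and `verify_model` are hypothetical helpers.
- The hashes are copied from the table.
- Every model is assumed to be saved as `models/ggml-<name>.bin`, following the `ggml-base.en.bin` example above.
- Upstream may have replaced model files since this tutorial was written.

```shell
#!/usr/bin/env bash
# Expected SHA-1 per model, copied from the table above.
expected_sha() {
  case "$1" in
    tiny)      echo bd577a113a864445d4c299885e0cb97d4ba92b5f ;;
    tiny.en)   echo c78c86eb1a8faa21b369bcd33207cc90d64ae9df ;;
    base)      echo 465707469ff3a37a2b9b8d8f89f2f99de7299dac ;;
    base.en)   echo 137c40403d78fd54d454da0f9bd998f78703390c ;;
    small)     echo 55356645c2b361a969dfd0ef2c5a50d530afd8d5 ;;
    small.en)  echo db8a495a91d927739e50b3fc1cc4c6b8f6c2d022 ;;
    medium)    echo fd9727b6e1217c2f614f9b698455c4ffd82463b4 ;;
    medium.en) echo 8c30f0e44ce9560643ebd10bbe50cd20eafd3723 ;;
    large-v1)  echo b1caaf735c4cc1429223d5a74f0f4d0b9b59a299 ;;
    large)     echo 0f4c8e34f21cf1a914c59d8b3ce882345ad349d6 ;;
    *) return 1 ;;
  esac
}

# Compare a file's SHA-1 with an expected digest (sha1sum prints "digest  filename").
sha1_matches() {
  local file="$1" want="$2" got
  got=$(sha1sum "$file" 2>/dev/null | cut -d' ' -f1)
  [ "$got" = "$want" ]
}

# Verify <dir>/models/ggml-<name>.bin; dir defaults to this tutorial's clone path.
verify_model() {
  local name="$1" dir="${2:-/config/plugins/whisper.cpp}" want
  want=$(expected_sha "$name") || { echo "no hash for $name" >&2; return 1; }
  sha1_matches "$dir/models/ggml-$name.bin" "$want"
}
```

Usage inside the container: `verify_model base.en && echo "model OK"`.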

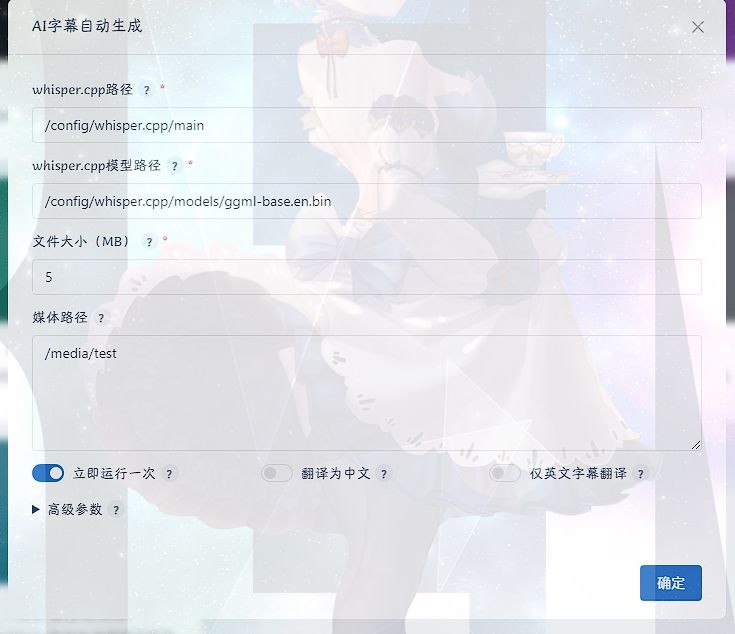

NAStool settings

Set the whisper.cpp path to /config/plugins/whisper.cpp/main as shown in the image; it does not need to be changed.

The whisper.cpp model path starts with /config/plugins/whisper.cpp/, followed by the relative path you copied earlier. For example, mine was models/ggml-base.en.bin, so the full path is /config/plugins/whisper.cpp/models/ggml-base.en.bin.
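The full model path is simply the install directory followed by the relative name printed by `ls`. The small sketch below prints the absolute path of every downloaded model in one go, ready to paste into NAStool. `list_model_paths` is a hypothetical helper, and its default directory is the one used in this tutorial.

```shell
#!/usr/bin/env bash
# Print absolute paths of all ggml model files under <dir>/models.
list_model_paths() {
  local dir="${1:-/config/plugins/whisper.cpp}" f found=0
  for f in "$dir"/models/ggml*.bin; do
    [ -e "$f" ] || continue   # the glob matched nothing
    printf '%s\n' "$f"
    found=1
  done
  [ "$found" -eq 1 ] || { echo "no models in $dir/models" >&2; return 1; }
}
```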

Set the file size to suit your needs.

Set the media path to match your own setup.

Tick "Run once immediately" for the first test run.

Configure the remaining options however you need.